With Couchbase Server 3.0, you get a great new option that changes the way memory is used to cache your keys and metadata. The new option is called “full ejection”. Here is how full ejection differs:

With 2.x, we cached all keys and metadata in memory and allowed only values to be ejected. That is great for low-latency access to any part of your data. However, some workloads don’t need low-latency access to all of their data and would rather reserve memory for the ‘hotter’ parts of the working set. Imagine a massive database collecting telemetry from sensors, or an Internet of Things (IoT) system gathering huge numbers of data points from all of its devices but mainly querying the last 24 hours of data. With 3.0, for large databases with a smaller active working set, you can turn on ‘full ejection’ and eject keys and metadata for the parts of your data that are rarely accessed. Keys and metadata may be small individually, but with a large number of keys, the memory they consume adds up. With full ejection, you can use that memory more effectively to cache a larger part of your working set.
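A quick back-of-envelope calculation shows how keys and metadata add up. In this Python sketch, the 60-byte per-item overhead, the 40-byte keys, and the 2% hot fraction are all hypothetical numbers chosen for illustration, not measured Couchbase values; the point is the shape of the arithmetic. Under value ejection, metadata memory scales with the total number of items, while under full ejection it scales with the items that are actually resident.

```python
# ASSUMPTION: hypothetical per-item overhead for illustration, not Couchbase's real figure.
META_OVERHEAD_BYTES = 60


def metadata_bytes(total_items: int, key_bytes: int, resident_fraction: float,
                   full_ejection: bool) -> int:
    """Estimate bytes of memory used for keys + metadata.

    Value ejection keeps every key and its metadata in memory, so the cost scales
    with total_items. Full ejection may eject keys and metadata as well, so only
    the resident (hot) fraction of items is counted.
    """
    if not 0.0 <= resident_fraction <= 1.0:
        raise ValueError("resident_fraction must be between 0 and 1")
    per_item = META_OVERHEAD_BYTES + key_bytes
    resident_items = round(total_items * resident_fraction) if full_ejection else total_items
    return resident_items * per_item


# 100 million items with 40-byte keys, where only 2% of items are hot.
print(metadata_bytes(100_000_000, 40, 0.02, full_ejection=False))  # 10000000000
print(metadata_bytes(100_000_000, 40, 0.02, full_ejection=True))   # 200000000
```

With these made-up inputs the difference is 10 GB versus 200 MB; real per-item costs differ, but the ratio is driven by how small the hot working set is relative to the whole dataset.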

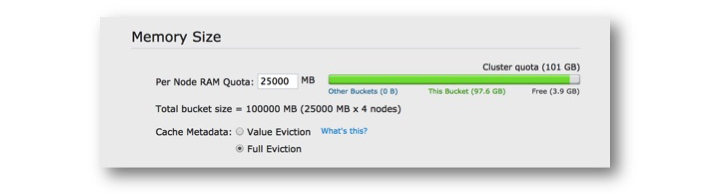

- Enabling the option is easy and can be done per bucket in the admin console. The change is transparent to apps, so nothing needs to be done on the application side to take advantage of the setting.

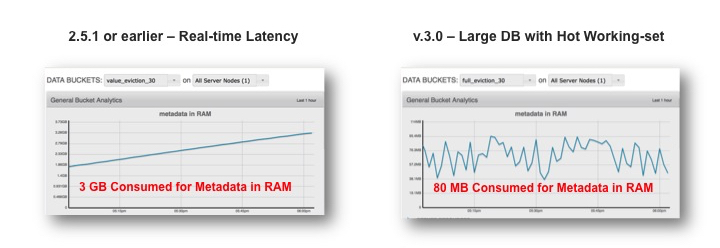

Let's look at some numbers to see how effective this can be for large databases. The graphs below plot the memory consumed for storing metadata during a data load on a 2.5.1 cluster and a 3.0 cluster with identical setups. 2.5.1 and earlier 2.x versions behave the same way in how they cache, so the results should be repeatable with any 2.x version as well.

- The graph on the left shows the amount of metadata in memory over time in 2.x, which runs in value ejection mode (the only caching mode available in 2.x).

- The graph on the right shows the 3.0 behavior under full ejection.

As data accumulates, you can see Couchbase Server 2.x consuming steadily more memory, reaching 3 GB. On the right, memory consumption stays around 80 MB with 3.0. With full ejection on Couchbase Server 3.0, the same operation consumes a much smaller amount of memory because it continuously ejects keys and metadata. The memory freed by ejecting keys and metadata becomes available for new keys and metadata, as well as for the values of the data being loaded. That is more than a 35x reduction (3 GB vs. 80 MB) in memory used for metadata. The test was run with the replica count set to 1 and an average value size of around 0.5 KB; both databases eventually reach around 50 million documents during the data load shown in the graphs.
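The rising-versus-flat behavior can be illustrated with a small Python simulation. It is a toy, not Couchbase's implementation: it assumes a hypothetical fixed cost per item for keys and metadata and a hypothetical metadata memory budget, and it uses strict least-recently-used (LRU) ejection as a stand-in for Couchbase's actual ejection algorithm. Under value ejection, resident metadata grows with every loaded key; under full ejection, it levels off at the budget as older keys and metadata are ejected to make room for new ones.

```python
from collections import OrderedDict
from typing import List

# ASSUMPTIONS (hypothetical, for illustration only):
# - every item's key + metadata costs a fixed META_BYTES_PER_ITEM bytes;
# - full ejection uses strict LRU, standing in for Couchbase's real algorithm;
# - only metadata is budgeted here, although a real bucket shares its RAM quota
#   between metadata and values.
META_BYTES_PER_ITEM = 100


def simulate_load(num_items: int, meta_budget_bytes: int, full_ejection: bool) -> List[int]:
    """Write num_items new keys and record resident metadata bytes after each write."""
    resident = OrderedDict()  # keys whose metadata is in memory, least recently used first
    history = []
    for i in range(num_items):
        resident[f"sensor::{i}"] = None  # a freshly written key starts out resident
        if full_ejection:
            # Eject the least recently used keys + metadata until back under budget.
            while len(resident) * META_BYTES_PER_ITEM > meta_budget_bytes:
                resident.popitem(last=False)
        # Under value ejection, keys and metadata are never ejected, so nothing is removed.
        history.append(len(resident) * META_BYTES_PER_ITEM)
    return history


value_only = simulate_load(1_000, 10_000, full_ejection=False)
full = simulate_load(1_000, 10_000, full_ejection=True)
print(value_only[-1], full[-1])  # 100000 10000
```

The value-ejection history climbs linearly for the whole load, while the full-ejection history stops growing once the budget is reached, which mirrors the shape of the two graphs at a much smaller scale.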

So what happens when the app needs to access keys and metadata that have been ejected in 3.0? There is a trade-off here: under full ejection, Couchbase performs additional I/O to bring the key and metadata, as well as the value, back into memory. Operations such as Add, Delete, Touch, and CAS still need the metadata, so they pay this cost when it has been ejected. Set, however, has a special optimization: Couchbase can skip retrieving the key and metadata and simply assume the previous version is invalidated.
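To make the access path concrete, here is a toy Python model that counts the extra disk reads ("background fetches") each operation triggers under each mode. Everything in it is hypothetical: the `ToyBucket` class, its method names, the dict standing in for disk, and the integer CAS values are illustrations, not Couchbase's implementation or client API. Following the description above, one fetch brings the key, metadata, and value back together, Add/Delete/Touch/CAS must consult metadata, and Set skips the lookup entirely.

```python
class ToyBucket:
    """Hypothetical model contrasting value ejection with full ejection."""

    def __init__(self, full_ejection: bool):
        self.full_ejection = full_ejection
        self.disk = {}             # key -> (value, cas); stands in for persisted data
        self.meta_in_mem = set()   # keys whose key + metadata are cached in memory
        self.value_in_mem = set()  # keys whose value is cached in memory
        self.bg_fetches = 0        # extra disk reads caused by ejection
        self._next_cas = 1

    def eject(self, key):
        self.value_in_mem.discard(key)     # both modes may eject the value
        if self.full_ejection:
            self.meta_in_mem.discard(key)  # only full ejection drops key + metadata

    def _exists(self, key) -> bool:
        """Check existence via metadata, fetching from disk when it was ejected."""
        if key in self.meta_in_mem:
            return True
        if not self.full_ejection:
            return False                   # all metadata is resident: absent means missing
        self.bg_fetches += 1               # must read disk to learn whether the key exists
        if key in self.disk:
            self.meta_in_mem.add(key)      # one fetch brings key, metadata, and value back
            self.value_in_mem.add(key)
            return True
        return False

    def _write(self, key, value) -> int:
        cas = self._next_cas
        self._next_cas += 1
        self.disk[key] = (value, cas)
        self.meta_in_mem.add(key)
        self.value_in_mem.add(key)
        return cas

    def set(self, key, value) -> int:
        # No metadata lookup: the new version simply supersedes any previous one.
        return self._write(key, value)

    def add(self, key, value):
        if self._exists(key):
            return None                    # add fails if the key already exists
        return self._write(key, value)

    def cas(self, key, value, expected_cas):
        if not self._exists(key) or self.disk[key][1] != expected_cas:
            return None                    # missing key or CAS mismatch
        return self._write(key, value)

    def touch(self, key) -> bool:
        return self._exists(key)           # a real touch would also update the expiry

    def delete(self, key) -> bool:
        if not self._exists(key):
            return False
        del self.disk[key]
        self.meta_in_mem.discard(key)
        self.value_in_mem.discard(key)
        return True

    def get(self, key):
        if not self._exists(key):
            return None
        if key not in self.value_in_mem:
            self.bg_fetches += 1           # value was ejected: read it back from disk
            self.value_in_mem.add(key)
        return self.disk[key][0]
```

For example, after `b = ToyBucket(full_ejection=True)`, `b.set("k", "v1")`, and `b.eject("k")`, a `b.touch("k")` costs one background fetch, while a `b.set("k", "v2")` costs none. With `full_ejection=False`, the same `touch` costs nothing, because metadata never leaves memory; only reading an ejected value does.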

Before I close, I want to make it clear that the new full ejection option brings a great deal of efficiency for large datasets. However, if you have applications that need consistently low latency for all data access, you should continue to use the value ejection option. Cookie or session stores, shopping cart applications, and profile stores are a great fit for pinning all keys and metadata in memory, so they should use value ejection.

Many thanks, and as always, all comments are welcome.

-cihan